We introduce a neuro-symbolic, transformer-based model that converts flat, segmented facade structures into procedural definitions using a custom-designed split grammar.

To facilitate this, we first develop a semi-complex split grammar tailored to architectural facades and then generate a dataset comprising facades alongside their corresponding procedural representations. This dataset is used to train our transformer model to convert flat, segmented facades into the procedural language of our grammar. During inference, the model applies this learned transformation to new facade segmentations, producing a procedural representation that users can adjust to generate varied facade designs.
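
As a rough illustration of what a split grammar does, the sketch below subdivides a rectangle along one axis into labelled parts and applies the rule twice to build a small facade. The `Rect` type, the `split` helper, and the relative-size convention are hypothetical names chosen for this example; they are not the grammar defined in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle with a class label (illustrative type)."""
    x: float
    y: float
    w: float
    h: float
    label: str


def split(rect, axis, sizes, labels):
    """Split `rect` along `axis` ('x' or 'y') into parts with relative `sizes`."""
    if axis not in ("x", "y"):
        raise ValueError("axis must be 'x' or 'y'")
    if len(sizes) != len(labels):
        raise ValueError("sizes and labels must have the same length")
    total = sum(sizes)
    extent = rect.w if axis == "x" else rect.h
    parts, offset = [], 0.0
    for size, label in zip(sizes, labels):
        length = extent * size / total  # share of the parent extent
        if axis == "x":
            parts.append(Rect(rect.x + offset, rect.y, length, rect.h, label))
        else:
            parts.append(Rect(rect.x, rect.y + offset, rect.w, length, label))
        offset += length
    return parts


# A 12 x 9 facade: three equal floors, each split into wall / window / wall.
facade = Rect(0.0, 0.0, 12.0, 9.0, "facade")
floors = split(facade, "y", [1, 1, 1], ["floor", "floor", "floor"])
tiles = [
    tile
    for floor in floors
    for tile in split(floor, "x", [1, 2, 1], ["wall", "window", "wall"])
]
```

Changing the relative sizes or the number of parts in either `split` call yields a different facade from the same rule structure, which is the kind of editability a procedural representation provides.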

This method not only automates the conversion of static facade images into dynamic, editable procedural formats but also increases design flexibility, letting architects and designers modify a facade and produce variations easily. Our approach combines the precision of procedural generation with the adaptability of neuro-symbolic learning.

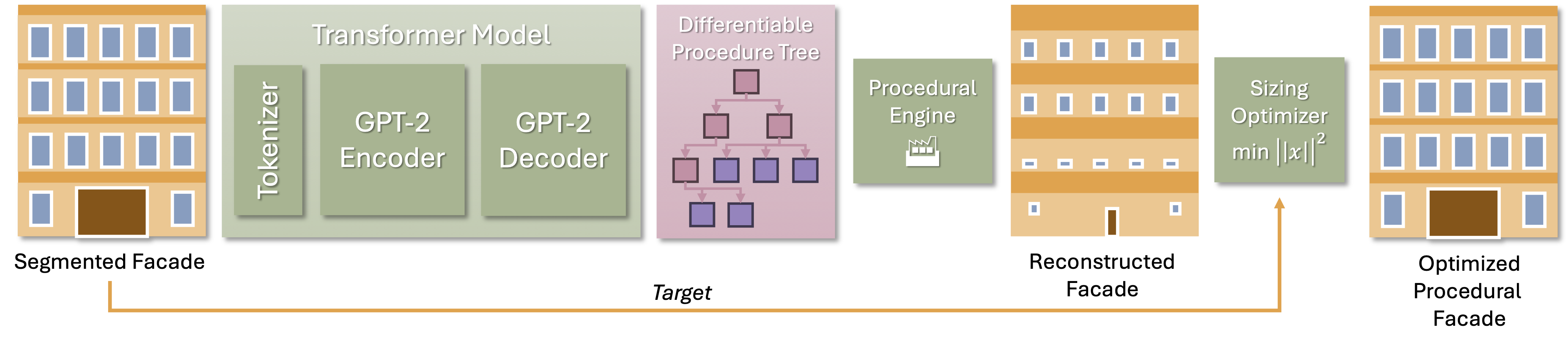

First, a segmented facade, represented by non-overlapping rectangles of various classes, is converted into a sequence of tokens representing geometric properties. These tokens are fed into a custom GPT-2 encoder, which processes the input sequence and transforms it into embeddings. The embeddings are then passed to the sequence generator, a custom GPT-2 decoder, which predicts the output one token at a time. In this process we use cross-attention to integrate the encoded information with the partially generated sequence (the procedure). Since procedures take the form of rooted trees, after the tokens are decoded we use a breadth-first traversal that produces a sequence of nodes, ensuring a consistent sequence representation. This procedure, when executed, regenerates the input’s structure, allowing for further customization.
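
The two sequence representations mentioned above can be sketched as follows: rectangles turned into geometric tokens, and a rooted procedure tree flattened in breadth-first order. The token format, the 64-bin quantization, and the names `rect_to_tokens`, `Node`, and `bfs_sequence` are assumptions made for illustration; the actual vocabulary and tree encoding used by the model may differ.

```python
from collections import deque
from dataclasses import dataclass, field


def rect_to_tokens(label, x, y, w, h, bins=64):
    """Quantize a rectangle with coordinates normalized to [0, 1] into tokens.

    The bin count and token spelling are hypothetical.
    """
    def quantize(value):
        return min(int(value * bins), bins - 1)

    return [
        f"<{label}>",
        f"x{quantize(x)}",
        f"y{quantize(y)}",
        f"w{quantize(w)}",
        f"h{quantize(h)}",
    ]


@dataclass
class Node:
    """Node of a rooted procedure tree (illustrative type)."""
    name: str
    children: list = field(default_factory=list)


def bfs_sequence(root):
    """Return node names in breadth-first order, level by level."""
    order, queue = [], deque([root])
    while queue:
        node = queue.popleft()
        order.append(node.name)
        queue.extend(node.children)  # children keep their left-to-right order
    return order


tokens = rect_to_tokens("window", 0.25, 0.5, 0.125, 0.25)
tree = Node("facade", [
    Node("ground_floor", [Node("door"), Node("wall")]),
    Node("upper_floors", [Node("window")]),
])
sequence = bfs_sequence(tree)
```

Because a breadth-first traversal visits a given tree in one fixed order, the same procedure always maps to the same node sequence.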

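The post-inference refinement described in the next paragraph can be illustrated with a toy example: a single row whose column widths are produced from relative size parameters, fitted to target widths by gradient descent on a squared-error loss. Everything here is a simplifying assumption, including the one-dimensional setup, the loss, the learning rate, and the function names; central finite differences stand in for the automatic differentiation a real implementation would likely use.

```python
def generate_widths(params, total_width):
    """Toy 'procedure': relative size parameters -> absolute column widths."""
    scale = total_width / sum(params)
    return [p * scale for p in params]


def loss(params, target, total_width):
    """Squared difference between generated and target widths."""
    widths = generate_widths(params, total_width)
    return sum((w - t) ** 2 for w, t in zip(widths, target))


def numerical_gradient(f, params, eps=1e-6):
    """Central finite differences (stand-in for automatic differentiation)."""
    grad = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def refine(params, target, total_width, lr=0.01, steps=500):
    """Plain gradient descent on the sizing parameters."""
    params = list(params)
    for _ in range(steps):
        grad = numerical_gradient(lambda p: loss(p, target, total_width), params)
        # Clamp so every size parameter stays strictly positive.
        params = [max(p - lr * g, 1e-3) for p, g in zip(params, grad)]
    return params


target_widths = [2.0, 6.0, 4.0]   # widths measured in the input segmentation
initial = [1.0, 1.0, 1.0]         # sizing parameters as predicted (toy values)
refined = refine(initial, target_widths, total_width=12.0)
refined_widths = generate_widths(refined, 12.0)
```

Only the sizing parameters change during refinement; the structure of the procedure stays as predicted.
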
After inference, the procedures undergo optimization to fine-tune the sizing parameters, ensuring accurate reproduction of the input facade segmentation. This is achieved through gradient-based optimization using a differentiable loss function that measures the difference between the input segmentation and the generated structure. The model demonstrates robust performance, with optimization techniques significantly improving accuracy and reducing potential training loss. This pipeline efficiently combines transformer-based architectures with neuro-symbolic inference, enabling the generation of detailed and customizable facade representations.

@inproceedings{plocharski2024facaid,

  author = {Plocharski, Aleksander and Swidzinski, Jan and Porter-Sobieraj, Joanna and Musialski, Przemyslaw},
  title = {Fa\c{c}AID: A Transformer Model for Neuro-Symbolic Facade Reconstruction},
  year = {2024},
  isbn = {9798400711312},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3680528.3687657},
  doi = {10.1145/3680528.3687657},
  booktitle = {SIGGRAPH Asia 2024 Conference Papers},
  articleno = {123},
  numpages = {11},
  keywords = {neurosymbolic, procedural generation, facade modeling, transformers},
  location = {Tokyo, Japan},
  series = {SA '24}

}